Export data from Redshift to S3 | Amazon Redshift to S3: 2 Easy Methods

Unloading data to Amazon S3: Amazon Redshift splits the results of a SELECT statement across a set of files, one or more files per node slice, to simplify parallel reloading of the data. Alternatively, you can specify that UNLOAD should write the results serially to one or more files by adding the PARALLEL OFF option.
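The parallel/serial choice described above maps onto UNLOAD's PARALLEL option. The following is a minimal sketch, assuming you build the SQL as a string and run it through whichever Redshift client you already use; `build_unload` is a hypothetical helper, and MAXFILESIZE is an extra UNLOAD option not discussed in the text, included only to show where file sizing fits. The bucket and role ARN are placeholders.

```python
from typing import Optional


def build_unload(query: str, s3_prefix: str, iam_role: str,
                 parallel: bool = True,
                 max_file_size_mb: Optional[int] = None) -> str:
    """Build an UNLOAD statement for `query`, writing files under `s3_prefix`.

    parallel=True keeps Redshift's default behavior (one or more files per
    node slice); parallel=False adds PARALLEL OFF to write serially instead.
    """
    # The query is passed as a single-quoted literal, so embedded single
    # quotes must be doubled.
    quoted = query.replace("'", "''")
    lines = [
        f"UNLOAD ('{quoted}')",
        f"TO '{s3_prefix}'",
        f"IAM_ROLE '{iam_role}'",
    ]
    if not parallel:
        lines.append("PARALLEL OFF")
    if max_file_size_mb is not None:
        # Redshift documents a 5 MB to 6.2 GB range for MAXFILESIZE;
        # this sketch does not validate it.
        lines.append(f"MAXFILESIZE {max_file_size_mb} MB")
    return "\n".join(lines) + ";"


if __name__ == "__main__":
    # Hypothetical bucket and role ARN, for illustration only.
    print(build_unload(
        "SELECT * FROM sales",
        "s3://example-bucket/unload/sales_",
        "arn:aws:iam::123456789012:role/ExampleUnloadRole",
        parallel=False,
        max_file_size_mb=100,
    ))
```

Leaving `parallel=True` is usually the faster choice, since every slice writes its own files at once; serial output mainly helps when a downstream consumer expects as few files as possible.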

Feb 16, 2022: You can use this feature to export data to JSON files in Amazon S3 from your Amazon Redshift cluster or your Amazon Redshift Serverless endpoint to make …

You can unload the result of an Amazon Redshift query to your Amazon S3 data lake in Apache Parquet, an efficient open columnar storage format for analytics. Parquet format …
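To make the JSON and Parquet options concrete, here is a small sketch that appends UNLOAD's `FORMAT AS` clause. The helper name `build_format_unload` and the example table, bucket, and role are hypothetical; the three format keywords (CSV, JSON, PARQUET) are the ones UNLOAD accepts.

```python
ALLOWED_FORMATS = {"CSV", "JSON", "PARQUET"}


def build_format_unload(query: str, s3_prefix: str, iam_role: str, fmt: str) -> str:
    """Build an UNLOAD statement that writes `query` results as CSV, JSON, or Parquet."""
    fmt = fmt.upper()
    if fmt not in ALLOWED_FORMATS:
        raise ValueError(f"unsupported UNLOAD format: {fmt}")
    # Double embedded single quotes so the query survives as a string literal.
    quoted = query.replace("'", "''")
    return (
        f"UNLOAD ('{quoted}')\n"
        f"TO '{s3_prefix}'\n"
        f"IAM_ROLE '{iam_role}'\n"
        f"FORMAT AS {fmt};"
    )


if __name__ == "__main__":
    # Hypothetical data-lake location and role.
    print(build_format_unload(
        "SELECT * FROM customer",
        "s3://example-bucket/lake/customer/",
        "arn:aws:iam::123456789012:role/ExampleUnloadRole",
        "parquet",
    ))
```

Parquet is the usual pick when the files will be queried in place (for example from Redshift Spectrum), while JSON suits consumers that expect one self-describing record per line.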

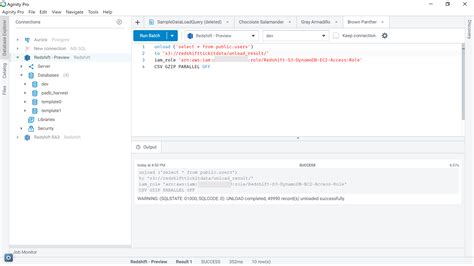

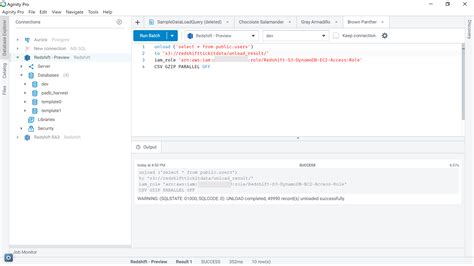

Sep 16, 2021: The basic syntax to export your data is as follows: `UNLOAD ('SELECT * FROM your_table') TO 's3://object-path/name-prefix' IAM_ROLE 'arn:aws:iam::<aws-account-id>:role/<role-name>' CSV;` On …
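One detail the basic syntax hides: the SELECT is itself a single-quoted string, so any quotes inside it have to be doubled. A minimal sketch that produces the statement shape shown above; `unload_csv`, the `venue` query, the bucket, and the role ARN are all hypothetical.

```python
def unload_csv(query: str, s3_prefix: str, iam_role: str) -> str:
    """Mirror the basic pattern: UNLOAD ('query') TO 'prefix' IAM_ROLE 'arn' CSV;"""
    # UNLOAD takes the query as a single-quoted string literal, so any
    # single quote inside the query (e.g. around 'NV') must be doubled.
    escaped = query.replace("'", "''")
    return f"UNLOAD ('{escaped}') TO '{s3_prefix}' IAM_ROLE '{iam_role}' CSV;"


if __name__ == "__main__":
    print(unload_csv(
        "SELECT * FROM venue WHERE venuestate = 'NV'",
        "s3://example-bucket/venue_",
        "arn:aws:iam::123456789012:role/ExampleRole",
    ))
```

Without the doubling, the first quote around `NV` would terminate the query literal early and the statement would fail to parse.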

Sep 3, 2021: The following are two methods you can use to unload your data from Amazon Redshift to S3. Method 1: unload data from Amazon Redshift to S3 using the UNLOAD command; Method 2: …

Nov 15, 2017: Define a pipeline with the following components: SqlDataNode and S3DataNode. The SqlDataNode references your Redshift database and the SELECT query used to extract your data. …
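As a rough illustration of how those components might fit together, the sketch below builds a pipeline definition as a Python dict. The object types (RedshiftDatabase, SqlDataNode, S3DataNode, CopyActivity, Ec2Resource) and field names follow the AWS Data Pipeline object reference as we recall it; treat them as assumptions to verify against current documentation. Every id, the cluster name, and the password placeholder are hypothetical.

```python
import json


def redshift_to_s3_pipeline(cluster_id: str, database: str, username: str,
                            password_placeholder: str, table: str,
                            select_query: str, s3_dir: str) -> dict:
    """Return a hypothetical Data Pipeline definition copying a Redshift query result to S3."""
    return {
        "objects": [
            # Defaults shared by every object in the pipeline.
            {"id": "Default", "name": "Default", "scheduleType": "ondemand",
             "role": "DataPipelineDefaultRole",
             "resourceRole": "DataPipelineDefaultResourceRole"},
            # Connection details for the source cluster (assumed field names).
            {"id": "RedshiftDb", "name": "RedshiftDb", "type": "RedshiftDatabase",
             "clusterId": cluster_id, "databaseName": database,
             "username": username, "*password": password_placeholder},
            # SqlDataNode: the Redshift table and SELECT query to extract.
            {"id": "SourceQuery", "name": "SourceQuery", "type": "SqlDataNode",
             "database": {"ref": "RedshiftDb"}, "table": table,
             "selectQuery": select_query},
            # S3DataNode: the directory the extracted rows are written to.
            {"id": "S3Output", "name": "S3Output", "type": "S3DataNode",
             "directoryPath": s3_dir},
            # CopyActivity moves data from the input node to the output node,
            # running on the EC2 resource defined below.
            {"id": "CopyToS3", "name": "CopyToS3", "type": "CopyActivity",
             "input": {"ref": "SourceQuery"}, "output": {"ref": "S3Output"},
             "runsOn": {"ref": "Worker"}},
            {"id": "Worker", "name": "Worker", "type": "Ec2Resource",
             "terminateAfter": "2 Hours"},
        ]
    }


if __name__ == "__main__":
    definition = redshift_to_s3_pipeline(
        "example-cluster", "dev", "awsuser", "REPLACE_ME",
        "sales", "SELECT * FROM sales", "s3://example-bucket/export/")
    print(json.dumps(definition, indent=2))
```

Compared with a single UNLOAD statement, this route copies rows through an EC2 worker, so it is typically slower; it mainly makes sense when the export has to be one step in a larger scheduled workflow.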

Aug 27, 2019: Redshift UNLOAD is the fastest way to export data from a Redshift cluster. In the big data world, people generally use the data in S3 as a data lake, so it's important to make sure the data in S3 …

Aug 15, 2019: The UNLOAD command is quite efficient at getting data out of Redshift and dropping it into S3 so it can be loaded into your application database. Another common use case is pulling data out of Redshift that …
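When another system has to pick up the unloaded files, it needs to know exactly which objects UNLOAD wrote. One option (an addition here, not something the snippets above describe) is UNLOAD's MANIFEST option, which writes a JSON file listing every output object. The sketch below reads such a manifest; the sample's shape (an `entries` list of `url` objects, with per-file `meta` counts when MANIFEST VERBOSE is used) is our understanding of the format, and the bucket and keys are placeholders.

```python
import json
from typing import List

# Hypothetical manifest in the shape UNLOAD ... MANIFEST VERBOSE produces
# (bucket and key names are placeholders).
SAMPLE_MANIFEST = """
{
  "entries": [
    {"url": "s3://example-bucket/unload/sales_0000_part_00",
     "meta": {"content_length": 1024, "record_count": 10}},
    {"url": "s3://example-bucket/unload/sales_0001_part_00",
     "meta": {"content_length": 2048, "record_count": 20}}
  ]
}
"""


def manifest_urls(manifest_text: str) -> List[str]:
    """Return the S3 object URLs listed in an UNLOAD manifest."""
    manifest = json.loads(manifest_text)
    return [entry["url"] for entry in manifest.get("entries", [])]


def total_records(manifest_text: str) -> int:
    """Sum per-file record counts; a plain MANIFEST (no VERBOSE) omits them, giving 0."""
    manifest = json.loads(manifest_text)
    return sum(entry.get("meta", {}).get("record_count", 0)
               for entry in manifest.get("entries", []))


if __name__ == "__main__":
    for url in manifest_urls(SAMPLE_MANIFEST):
        print(url)
    print(total_records(SAMPLE_MANIFEST))
```

Driving the downstream load from the manifest, rather than listing the S3 prefix, avoids accidentally picking up stale files from an earlier run.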

The COPY command uses the Amazon Redshift massively parallel processing (MPP) architecture to read and load data in parallel from multiple data sources. You can load …

May 28, 2024: Move data from Amazon S3 to Redshift with AWS Data Pipeline. AWS Data Pipeline is a purpose-built Amazon service that you can use to …
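To take advantage of that parallelism when reloading unloaded files, COPY can read the exact file list from a manifest. A minimal sketch, assuming the manifest already exists in S3; `build_copy_from_manifest`, the table, the manifest URL, and the role are hypothetical.

```python
from typing import Optional


def build_copy_from_manifest(table: str, manifest_url: str, iam_role: str,
                             fmt: Optional[str] = None) -> str:
    """Build a COPY that loads every file listed in an S3 manifest into `table`."""
    lines = [
        f"COPY {table}",
        f"FROM '{manifest_url}'",
        f"IAM_ROLE '{iam_role}'",
        # MANIFEST tells COPY that FROM points at a manifest, not a key prefix.
        "MANIFEST",
    ]
    if fmt:
        lines.append(f"FORMAT AS {fmt.upper()}")
    return "\n".join(lines) + ";"


if __name__ == "__main__":
    print(build_copy_from_manifest(
        "sales",
        "s3://example-bucket/unload/sales_manifest",
        "arn:aws:iam::123456789012:role/ExampleRole",
        "csv",
    ))
```

Because the manifest lists several files, Redshift can hand them to different slices and load them concurrently, which is where the MPP speedup comes from.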

Dec 7, 2021: After using Integrate.io to load data into Amazon Redshift, you may want to extract data from your Redshift tables to Amazon S3. There are various reasons why you would want to do this, for example: …

Amazon Redshift stores these snapshots internally in Amazon S3 by using an encrypted Secure Sockets Layer (SSL) connection. … See CREATE TABLE and CREATE TABLE AS in the Amazon Redshift Database Developer Guide. Copying snapshots to another AWS Region: you can configure Amazon Redshift to automatically copy snapshots …

Equally important to loading data into a data warehouse like Amazon Redshift is the process of exporting, or unloading, data from it. There are a couple of different reasons for this. First, whatever action we perform on the data stored in Amazon Redshift, new data is generated.

Jan 10, 2022: We have our data loaded into a bucket, s3://redshift-copy-tutorial/. Our source data is in the /load/ folder, making the S3 URI s3://redshift-copy-tutorial/load. The key prefix specified in the first line of the command pertains to tables with multiple files. Credential variables can be accessed through AWS security settings.
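The key-prefix behavior mentioned in the tutorial means COPY picks up every object whose key starts with the given prefix. The toy sketch below simulates that matching locally to show a common pitfall: a prefix also matches unrelated files such as backups. `keys_matching_prefix` and the object keys are hypothetical; only the bucket URI comes from the text.

```python
from typing import Iterable, List
from urllib.parse import urlparse


def keys_matching_prefix(s3_uri: str, keys: Iterable[str]) -> List[str]:
    """Return the keys a COPY FROM '<s3_uri>' would select by prefix.

    `keys` are assumed to come from the same bucket as `s3_uri`; the bucket
    name itself is not checked.
    """
    prefix = urlparse(s3_uri).path.lstrip("/")
    return sorted(k for k in keys if k.startswith(prefix))


if __name__ == "__main__":
    # Hypothetical object keys in the tutorial's bucket.
    keys = [
        "load/part-csv.tbl-000",
        "load/part-csv.tbl-001",
        "load/part-csv.tbl.backup",
        "other/part-csv.tbl-000",
    ]
    for key in keys_matching_prefix("s3://redshift-copy-tutorial/load/part-csv.tbl", keys):
        print(key)
```

The `.backup` object would be loaded too, which is one reason to prefer a manifest when the prefix is not unique.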

Feb 16, 2022: After we added column aliases, the UNLOAD command completed successfully and the files were exported to the desired location in Amazon S3. The following screenshot shows data unloaded in JSON format, with the output files partitioned into folders that follow the Apache Hive convention and customer birth month as the partition …
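The partitioned JSON export described above can be sketched with UNLOAD's `PARTITION BY` clause. In this minimal sketch the helper names, the `customer` query (with explicit column aliases, as the snippet suggests), the bucket, and the role are hypothetical; `hive_partition_path` only illustrates the `column=value/` folder naming and does not call S3.

```python
from typing import Dict, List


def build_partitioned_unload(query: str, s3_prefix: str, iam_role: str,
                             partition_cols: List[str], fmt: str = "JSON",
                             include: bool = False) -> str:
    """Build an UNLOAD that writes Hive-style partition folders under s3_prefix."""
    quoted = query.replace("'", "''")
    clause = f"PARTITION BY ({', '.join(partition_cols)})"
    if include:
        # INCLUDE keeps the partition columns inside the data files too;
        # by default they appear only in the folder names.
        clause += " INCLUDE"
    return (
        f"UNLOAD ('{quoted}')\n"
        f"TO '{s3_prefix}'\n"
        f"IAM_ROLE '{iam_role}'\n"
        f"FORMAT AS {fmt}\n"
        f"{clause};"
    )


def hive_partition_path(s3_prefix: str, values: Dict[str, object]) -> str:
    """Return the folder rows with these partition values land in (col=value/...)."""
    segments = "/".join(f"{col}={val}" for col, val in values.items())
    return f"{s3_prefix.rstrip('/')}/{segments}/"


if __name__ == "__main__":
    print(build_partitioned_unload(
        "SELECT c_customer_id AS customer_id, c_birth_month AS birth_month FROM customer",
        "s3://example-bucket/customers/",
        "arn:aws:iam::123456789012:role/ExampleRole",
        ["birth_month"],
    ))
    print(hive_partition_path("s3://example-bucket/customers/", {"birth_month": 5}))
```

Query engines that understand the Hive layout can then prune folders by `birth_month` instead of scanning every file.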

Feb 8, 2018: I would like to unload data files from Amazon Redshift to Amazon S3 in Apache Parquet format in order to query the files on S3 using Redshift Spectrum. I have explored everywhere, but I couldn't find … Just to clarify, the blog post mentioned is about copying INTO Redshift, not out to S3. (mbourgon, commented Sep 24, 2019)

I'm trying to COPY or UNLOAD data between Amazon Redshift and an Amazon Simple Storage Service (Amazon S3) bucket in another account. However, I can't assume the AWS Identity and Access Management (IAM) role in the other account.

Use the COPY command to load a table in parallel from data files on Amazon S3. You can specify the files to be loaded by using an Amazon S3 object prefix or by using a manifest file. … The values for authorization provide the AWS authorization Amazon Redshift needs to access the Amazon S3 objects. For information about required permissions …

Jun 7, 2017: I don't believe there are any direct export methods from Redshift to services other than S3. That being said, there are tools such as AWS Data Pipeline that you could use to create a workflow to transfer data between services, but you'll probably need to do a bit of extra work.
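For the cross-account case, Redshift supports IAM role chaining: the IAM_ROLE value lists several role ARNs separated by commas with no spaces, and the cluster's role assumes the next one, which would live in the bucket owner's account. The sketch below combines that with a Parquet unload, matching the Spectrum question above. The helper names, account IDs, and role names are hypothetical, and the trust policies that make the chain work still have to be configured in IAM.

```python
from typing import Sequence


def chained_iam_role(role_arns: Sequence[str]) -> str:
    """Join role ARNs into the comma-separated, space-free string used for role chaining."""
    if not role_arns:
        raise ValueError("at least one role ARN is required")
    for arn in role_arns:
        if "," in arn or " " in arn:
            raise ValueError(f"invalid role ARN: {arn!r}")
    return ",".join(role_arns)


def build_cross_account_unload(query: str, s3_prefix: str,
                               role_arns: Sequence[str]) -> str:
    """Build a Parquet UNLOAD that reaches another account's bucket via chained roles."""
    quoted = query.replace("'", "''")
    return (
        f"UNLOAD ('{quoted}')\n"
        f"TO '{s3_prefix}'\n"
        f"IAM_ROLE '{chained_iam_role(role_arns)}'\n"
        "FORMAT AS PARQUET;"
    )


if __name__ == "__main__":
    print(build_cross_account_unload(
        "SELECT * FROM sales",
        "s3://other-account-bucket/sales/",
        [
            "arn:aws:iam::111111111111:role/ClusterRole",  # attached to the cluster
            "arn:aws:iam::222222222222:role/BucketRole",   # in the bucket's account
        ],
    ))
```

The order matters: the first ARN must be a role associated with the cluster, and each later role must trust the one before it.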

Apr 4, 2014: I'm trying to move a file from Redshift to S3. Is there an option to move this file as a .csv? Currently I am writing a shell script to get the Redshift data, save it as a .csv, and then upload it to S3. I'm assuming that since this is all on AWS services, there would be an argument or something that lets me do this.
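The shell-script round trip in that question can usually be replaced by a single UNLOAD that writes CSV directly to S3. A minimal sketch, assuming a header row and as few files as possible are wanted; `build_single_csv_unload` and its arguments are hypothetical, while CSV, HEADER, PARALLEL OFF, and ALLOWOVERWRITE are UNLOAD options.

```python
def build_single_csv_unload(query: str, s3_prefix: str, iam_role: str,
                            overwrite: bool = False) -> str:
    """Build an UNLOAD that writes query results as CSV with a header row."""
    quoted = query.replace("'", "''")
    # PARALLEL OFF writes serially, so small result sets end up in one file.
    options = ["CSV", "HEADER", "PARALLEL OFF"]
    if overwrite:
        # By default UNLOAD fails rather than overwrite existing files.
        options.append("ALLOWOVERWRITE")
    return (
        f"UNLOAD ('{quoted}')\n"
        f"TO '{s3_prefix}'\n"
        f"IAM_ROLE '{iam_role}'\n"
        + "\n".join(options) + ";"
    )


if __name__ == "__main__":
    print(build_single_csv_unload(
        "SELECT * FROM sales",
        "s3://example-bucket/exports/sales_",
        "arn:aws:iam::123456789012:role/ExampleRole",
        overwrite=True,
    ))
```

Redshift still derives the final object name from the prefix and splits very large outputs into several files, so a consumer should not hard-code a single file name.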

Feb 22, 2020: You can leverage Hevo to seamlessly transfer data from S3 to Redshift in real time without writing a single line of code. Hevo's Data Pipeline enriches your data and manages the transfer process in a fully …

Dec 3, 2019: A data warehouse is a database optimized to analyze relational data coming from transactional systems and line-of-business applications. Amazon Redshift is a fast, fully managed data warehouse that makes it simple and cost-effective to analyze data using standard SQL and existing Business Intelligence (BI) tools. To get information from …

Jun 15, 2023: `COPY table_name [column_list] FROM data_source CREDENTIALS access_credentials [options]`. The table_name is the target table and must already exist in Redshift. The optional column_list names the specific columns of table_name that the incoming data maps to. The data_source includes the path to …

Dec 7, 2022: “With the support for auto-copy from Amazon S3, we can build simpler data pipelines to move data from Amazon S3 to Amazon Redshift. This accelerates our data product teams' ability to access data and deliver insights to end users. We spend more time adding value through data and less time on integrations.”
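Filling in the COPY template above, a sketch might look like the following. It uses the `CREDENTIALS 'aws_iam_role=...'` form of access_credentials; the helper `build_copy`, the `venue` table and columns, the data source path, and the role are hypothetical.

```python
from typing import Optional, Sequence


def build_copy(table: str, data_source: str, iam_role_arn: str,
               columns: Optional[Sequence[str]] = None,
               options: Sequence[str] = ()) -> str:
    """Build COPY table_name [column_list] FROM data_source CREDENTIALS ... [options]."""
    # The optional column list maps incoming fields onto these target columns.
    column_list = f" ({', '.join(columns)})" if columns else ""
    lines = [
        f"COPY {table}{column_list}",
        f"FROM '{data_source}'",
        f"CREDENTIALS 'aws_iam_role={iam_role_arn}'",
    ]
    lines.extend(options)
    return "\n".join(lines) + ";"


if __name__ == "__main__":
    print(build_copy(
        "venue",
        "s3://example-bucket/load/venue",
        "arn:aws:iam::123456789012:role/ExampleRole",
        columns=["venueid", "venuename"],
        options=["CSV"],
    ))
```

The target table must exist before COPY runs, as the snippet notes; COPY does not create it.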

You can use the COPY command to load (or import) data into Amazon Redshift and the UNLOAD command to unload (or export) data from Amazon Redshift. You can use the CREATE EXTERNAL FUNCTION command to create user-defined functions that invoke functions from AWS Lambda.

Aug 30, 2016: A few days ago, we needed to export the results of a Redshift query into a CSV file and then upload it to S3 so we could feed a third-party API. Redshift already has an UNLOAD command that does just …
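To trigger an UNLOAD from a script, one option (our assumption, not the approach the snippet describes) is the Redshift Data API, whose boto3 client provides `execute_statement` and `describe_statement`. The sketch takes the client as a parameter so it can run without AWS access; `run_and_wait` is a hypothetical helper.

```python
import time
from typing import Any, Dict


def run_and_wait(client: Any, sql: str, poll_seconds: float = 2.0,
                 **target: str) -> Dict[str, Any]:
    """Submit `sql` through the Redshift Data API and poll until it finishes.

    `client` should behave like boto3.client("redshift-data"). `target`
    carries connection keywords such as ClusterIdentifier, Database, and
    DbUser (or WorkgroupName for Redshift Serverless).
    """
    statement_id = client.execute_statement(Sql=sql, **target)["Id"]
    while True:
        desc = client.describe_statement(Id=statement_id)
        status = desc["Status"]
        if status == "FINISHED":
            return desc
        if status in ("FAILED", "ABORTED"):
            raise RuntimeError(
                f"statement {statement_id} {status}: {desc.get('Error', '')}")
        # Still SUBMITTED, PICKED, or STARTED: wait and poll again.
        time.sleep(poll_seconds)
```

With real credentials, a call might look like `run_and_wait(boto3.client("redshift-data"), unload_sql, ClusterIdentifier="example-cluster", Database="dev", DbUser="awsuser")`, where the cluster, database, and user are placeholders. Polling keeps the sketch simple; a scheduler or an event-driven notification would avoid holding a process open during long unloads.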